# Python XGBRegressor objective code

Running with different custom objective functions, described in the code block above, gives the following results.

Replacing the objective function with a function that only returns vectors of ones, with length equal to that of `y_true` and `y_pred` (`one_obj`), gives a prediction result no different from the global baseline. Another run gives a result that is different when compared to running with `objective=one_obj`. Also, `grad` and `hess` do not change between different calls to `logcoshobj`.

XGBoost is one of the most popular machine learning algorithms these days. Since its inception it has often been described as a state-of-the-art machine learning method, and it is widely reported to perform well against other machine learning algorithms on both regression and classification tasks.

Now let's use cross-validation with `XGBRegressor`. The steps are the same, except for initializing the model. Then implement `cross_val_score` with the model, `X`, `y`, `scoring`, and the number of folds, `cv`, as input.

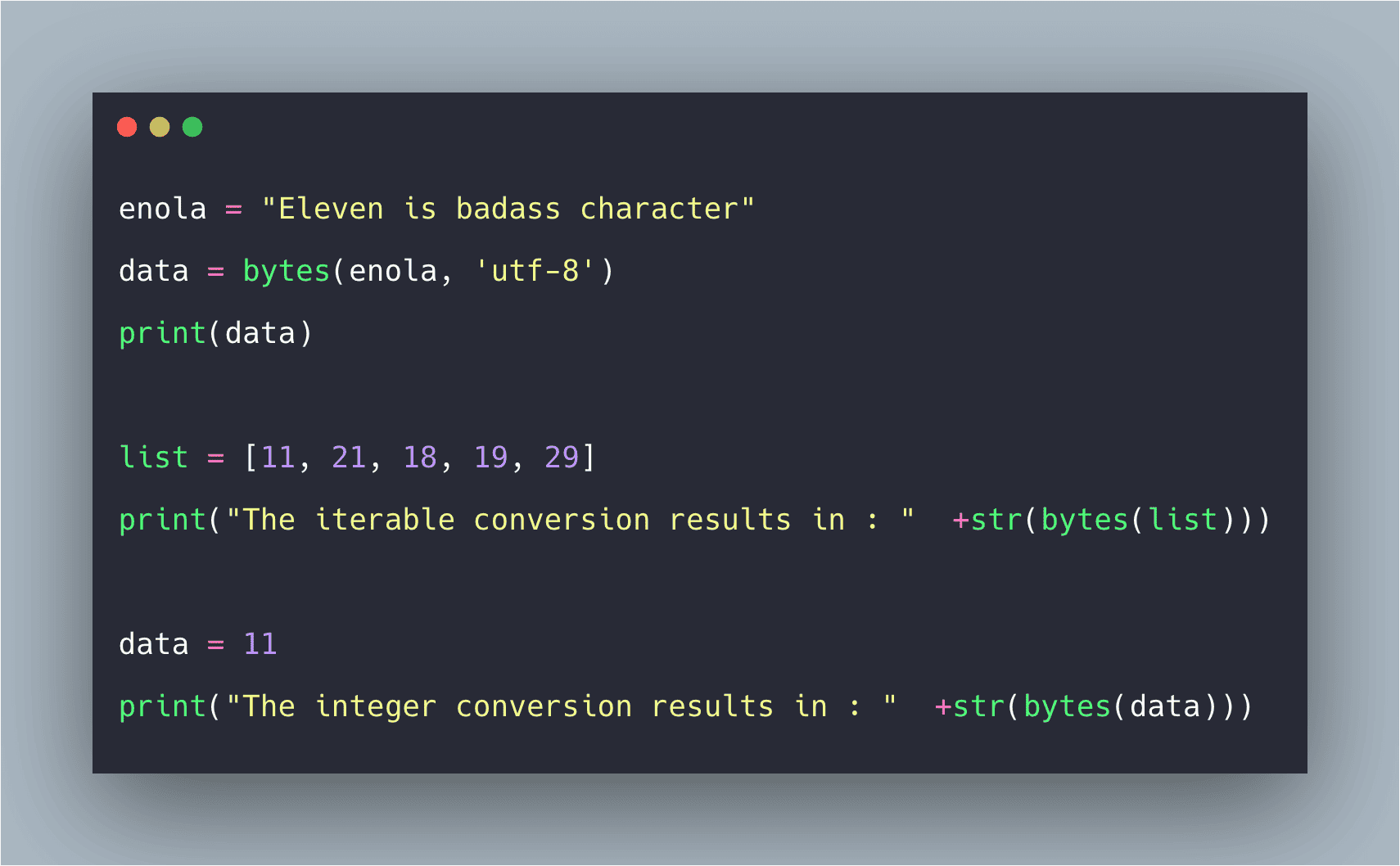

The result is the same as running with `objective=zero_obj`, although the `grad` and `hess` computed are non-zero. Here `zero_obj` returns `np.zeros(length), np.zeros(length)`. The gradient computed by the objective function `logcoshobj` is printed and is non-zero; note that XGBoost calls the objective while fitting, so the printout comes from `reg.fit`, not from `reg.predict(X)`.

In the test script, step 3 creates an `XGBRegressor` object with the argument `objective` set to the custom objective function. Step 4 fits a small dataset to a small result set and predicts on the same dataset, expecting a result similar to the result set.

XGBoost's native interface starts differently: `import xgboost as xgb`, read in the data with `dtrain = xgb.DMatrix('demo/data/')` and `dtest = xgb.DMatrix('demo/data/')`, then specify parameters via a map, `param`.

Programming languages like R and Python provide good tooling for data processing, data cleaning, data visualisation and machine learning modelling.

A custom objective function takes the arguments `(y_true, y_pred)` and returns the values `(grad, hess)`.
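To make the `(y_true, y_pred) -> (grad, hess)` contract concrete, here is a minimal sketch of the three objectives the text refers to. The bodies are reconstructions, not the author's code: `one_obj` and `zero_obj` follow the descriptions in the text, while `logcosh_obj` (spelled `logcoshobj` in the text) assumes the standard log-cosh loss, whose first and second derivatives with respect to the prediction are `tanh(r)` and `1 - tanh(r)**2` for the residual `r = y_pred - y_true`.

```python
import numpy as np

def one_obj(y_true, y_pred):
    """Return vectors of ones for both the gradient and the Hessian."""
    length = len(y_true)
    return np.ones(length), np.ones(length)

def zero_obj(y_true, y_pred):
    """Return vectors of zeros for both the gradient and the Hessian."""
    length = len(y_true)
    return np.zeros(length), np.zeros(length)

def logcosh_obj(y_true, y_pred):
    """Log-cosh loss: per-sample gradient and Hessian w.r.t. y_pred."""
    residual = y_pred - y_true
    grad = np.tanh(residual)      # d/dr log(cosh(r)) = tanh(r)
    hess = 1.0 - grad ** 2        # d/dr tanh(r) = 1 - tanh(r)^2
    return grad, hess

# Small hypothetical check of the shapes and values.
y_true = np.array([1.0, 2.0, 3.0])
y_pred = np.array([1.0, 2.5, 2.0])
grad, hess = logcosh_obj(y_true, y_pred)
print(grad, hess)
```

Any of these callables can be passed as `objective=` when constructing `xgb.XGBRegressor`, which is the step the text describes as creating the regressor with the custom objective. Both returned arrays must have one entry per training sample.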
